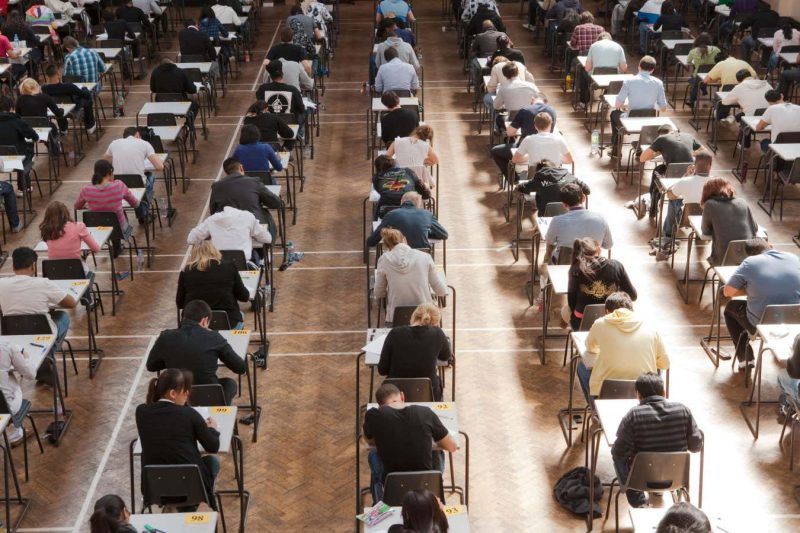

Exams taken in person make it harder for students to cheat using AI. Trish Gant / Alamy

Ninety-four per cent of university exam submissions created using ChatGPT weren’t detected as being generated by artificial intelligence, and these submissions tended to get higher scores than real students’ work.

Peter Scarfe at the University of Reading, UK, and his colleagues used ChatGPT to produce answers to 63 assessment questions on five modules across the university’s psychology undergraduate degrees. Students sat these exams at home, so they were allowed to look at notes and references, and they could potentially have used AI, although this wasn’t permitted.

The AI-generated answers were submitted alongside real students’ work, and accounted for, on average, 5 per cent of the total scripts marked by lecturers. The markers weren’t informed that they were checking the work of 33 fake students, whose names were themselves generated by ChatGPT.

The assessments included two types of questions: short answers and longer essays. The prompts given to ChatGPT began with the words “Including references to academic literature but not a separate reference section”, followed by the exam question copied verbatim.

Across all modules, only 6 per cent of the AI submissions were flagged as potentially not being a student’s own work, and in some modules no AI-generated work was flagged as suspicious at all. “On average, the AI responses gained higher grades than our real student submissions,” says Scarfe, though there was some variability across modules.

“Current AI tends to struggle with more abstract reasoning and integration of information,” he adds. But across all 63 AI submissions, there was an 83.4 per cent chance that the AI work outscored that of the students.

The researchers claim that their work is the largest and most robust study of its kind to date. Although the study only checked work on the University of Reading’s psychology degree, Scarfe believes it is a concern for the whole academic sector. “I have no reason to think that other subject areas wouldn’t have just the same kind of issue,” he says.

“The results show exactly what I’d expect to see,” says Thomas Lancaster at Imperial College London. “We know that generative AI can produce reasonable-sounding responses to simple, constrained textual questions.” He points out that unsupervised assessments including short answers have always been susceptible to cheating.

The workload of lecturers expected to mark work also doesn’t help their ability to pick up AI fakery. “Time-pressured markers of short answer questions are highly unlikely to raise AI misconduct cases on a whim,” says Lancaster. “I’m sure this isn’t the only institution where this is happening.”

Tackling it at source is going to be near-impossible, says Scarfe. So the sector must instead rethink what it is assessing. “I think it’s going to take the sector as a whole to acknowledge the fact that we’re going to have to be building AI into the assessments we give to our students,” he says.
